How to secure the cloud in the cloud native era

Introduction

When it comes to cloud security, some approaches rely on the same tools that worked for on-premises infrastructure. These approaches are commonly referred to as an on-premises security posture. More and more companies are building cloud native applications, and cloud security needs to shift accordingly: from an on-premises security posture to a cloud native approach that relies on the native primitives offered by the cloud provider.

Cloud native way of security

Cloud infrastructure has become the de facto platform for hosting the applications and workloads through which a business delivers value. The cloud has proved to be a value multiplier, and more and more businesses are trying to tap into that value. On the application side, companies are shifting to a cloud native approach to application architecture. This shift is the right one, because it helps companies realize the value of the cloud. The cloud native way of security takes the same cloud native approach used for applications and applies it to security. For example, a cloud native application is architected to use the cloud platform as a resource and not just as a hosting environment; similarly, cloud native security taps into the cloud platform itself to provide the security posture.

Note: The left side of the cloud native security diagram shows the conceptual view of security in depth. The implementation architecture might show each layer as a separate entity at the same level.

Why we need the cloud native way of security

It all comes down to understanding, and to the approach that follows from that understanding. There are two mindsets when it comes to cloud security. One mindset looks at what has worked in the on-premises data center and replicates it in the cloud. The other looks at the cloud as a platform whose resources provide the building blocks for cloud security. The on-premises mindset typically treats resources as virtual machines that are part of a network and are joined to a domain. The security tools that have evolved over the years for on-premises resources essentially address this same scheme. These tools include, but are not limited to:

- Relying on the host operating system to provide security by managing and restricting incoming and outgoing traffic to and from ports. A good example is Windows Firewall.

- Recording all the traffic flowing in and out of the network and then analyzing it to flag any suspicious traffic flow. This also shows that security actions are typically thought of as reactive rather than proactive.

These two points are just examples of the security tools employed by the on-premises approach. The intention here is to point out a mindset in which security is delegated to tools rather than built into the resources and workloads that make up an on-premises estate.

Those tools were carried over to the cloud because the cloud providers did not offer alternatives that supported the cloud native mindset. If we go back a couple of years and imagine having to host a cloud native application, we would still have had to rely on the tools that worked before.

Times have changed. The cloud providers have addressed the lack of security tools in the cloud infrastructure. We have entered an era in which native cloud security tools, or cloud primitive tools, have evolved to fully support cloud native security. What is needed now is cloud based solution architectures that leverage these cloud primitive security capabilities.

For example:

- Instead of relying on Windows Firewall, NSGs (Network Security Groups, in the case of Azure) should be employed. This also means evolving the network architectures designed for the cloud to support them.

- Instead of using security tools that record all the traffic, tools like Azure Sentinel should be employed.

The case for the cloud native way of security

Shift-Left Security:

With the cloud native way of security, there will be more of a push towards achieving security through the shift-left approach. This means that instead of security being addressed as a wrapper around the system, it is addressed as a feature that is "baked in" to the architecture from the beginning. That is the very definition of the shift-left approach.
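As a minimal sketch of what "baked in" can look like, assume the network rules for a workload are declared as data before anything is deployed. The check below rejects any inbound Allow rule that exposes SSH (22) or RDP (3389) to any source, so the problem is caught at design or build time rather than after deployment. The rule dictionaries, their field names, and the `find_violations` function are hypothetical illustrations, not an Azure API.

```python
from typing import Dict, List

# Hypothetical, simplified rule shape (not the Azure SDK's SecurityRule model).
Rule = Dict[str, str]

# Management ports that should not be reachable from any source on the internet.
RISKY_PORTS = {"22", "3389"}
# Source values that mean "anyone" in this simplified model.
OPEN_SOURCES = {"*", "0.0.0.0/0", "Internet"}


def find_violations(rules: List[Rule]) -> List[str]:
    """Return the names of inbound Allow rules that open risky ports to any source."""
    violations = []
    for rule in rules:
        # "*" as a port means all ports, which includes the risky ones.
        exposes_risky_port = rule["port"] in (RISKY_PORTS | {"*"})
        if (
            rule["direction"] == "Inbound"
            and rule["access"] == "Allow"
            and rule["source"] in OPEN_SOURCES
            and exposes_risky_port
        ):
            violations.append(rule["name"])
    return violations


if __name__ == "__main__":
    proposed = [
        {"name": "allow-https", "direction": "Inbound", "access": "Allow", "source": "*", "port": "443"},
        {"name": "allow-rdp", "direction": "Inbound", "access": "Allow", "source": "Internet", "port": "3389"},
        {"name": "allow-ssh-vpn", "direction": "Inbound", "access": "Allow", "source": "10.0.0.0/16", "port": "22"},
    ]
    # In a CI pipeline, a non-empty result would fail the build before deployment.
    print(find_violations(proposed))  # prints ['allow-rdp']
```

The point of the design is where the check runs: it inspects the declared architecture, not live traffic, which is what distinguishes a shift-left control from a reactive one.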

Security by platform:

As more and more systems are automated and moved to the cloud, more and more threats are emerging, and those threats are becoming multidimensional in nature. The cloud native way of security is better equipped to cope with present and emerging threats, because the protections are built into, and maintained as part of, the platform itself.

Defense in Depth:

The cloud native way of security allows multiple mitigations to be applied against threats. If we replaced it with the on-premises way of security, we would need multiple layers of security that we would have to stand up and maintain, either ourselves or through vendors.
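The following is a conceptual sketch of defense in depth, assuming a request must clear several independent layers before it reaches a workload. Each layer here is a hypothetical stand-in (a firewall block list, a deliberately naive WAF-style signature check, an NSG-style port allow list); in the cloud native model each of these would be a managed service rather than something we stand up ourselves. The IP addresses, ports, and signature are made up for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Request:
    source_ip: str
    port: int
    path: str


# Each layer returns None to let the request through, or a reason string to block it.
Layer = Tuple[str, Callable[[Request], Optional[str]]]

BLOCKED_IPS = {"203.0.113.7"}  # hypothetical threat-intelligence block list
ALLOWED_PORTS = {443}          # hypothetical NSG-style port allow list


def firewall(req: Request) -> Optional[str]:
    return "source IP on block list" if req.source_ip in BLOCKED_IPS else None


def waf(req: Request) -> Optional[str]:
    # Naive signature match, only to illustrate a WAF-style layer.
    return "suspicious payload" if "' OR 1=1" in req.path else None


def nsg(req: Request) -> Optional[str]:
    return None if req.port in ALLOWED_PORTS else f"port {req.port} not allowed"


LAYERS: List[Layer] = [("firewall", firewall), ("waf", waf), ("nsg", nsg)]


def evaluate(req: Request, layers: List[Layer] = LAYERS) -> str:
    """Pass the request through every layer; the first layer that objects blocks it."""
    for name, check in layers:
        reason = check(req)
        if reason is not None:
            return f"blocked by {name}: {reason}"
    return "allowed"


if __name__ == "__main__":
    print(evaluate(Request("198.51.100.10", 443, "/home")))  # prints allowed
    print(evaluate(Request("198.51.100.10", 22, "/home")))   # prints blocked by nsg: port 22 not allowed
```

Because every layer can independently stop a request, a gap in one layer (for example, a payload the WAF check does not recognize) can still be covered by another.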

Scale: The cloud native way of security is platform based, so it inherently scales with the platform. To understand this, imagine we are back in the 2000s. The data generated at that time was nowhere near the scale we have now, and on-premises infrastructure was provisioned to cater to that footprint of data. Now we are in an era of “big data”, and supporting the scale requirements of that enormous amount of data with an on-premises approach to security would need more and more dedicated resources.

Focus on business value: With the cloud native way of security, the focus of the business shifts from both security and business to primarily business, because the platform takes on much of the security work. This also means that the computing base a business is responsible for is reduced, which makes the systems more secure: there is less computing base that the business needs to protect.

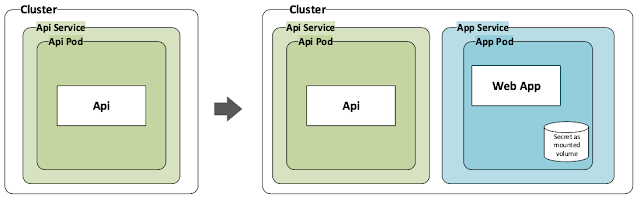

Containerization (and Kubernetes) revolution: With the advent of containerization and container orchestrators such as Kubernetes, more and more applications have shifted towards cloud native architecture. This shift means that the classical cloud security model needs to shift as well, towards the cloud native way of security.

Example Components of Cloud Native Security Architecture

Azure Sentinel

Each cloud provider has developed its own set of tools that fall within the realm of the cloud native way of security. On Azure's side, the tool that implements the cloud native way of security is Azure Sentinel (since renamed Microsoft Sentinel). Azure Sentinel is a cloud native SIEM (security information and event management) solution that leverages AI for threat protection. It works through data connectors, which hook into various Azure services such as Azure Firewall and feed their data into Azure Sentinel. Azure Sentinel then runs security analytics on that data and presents the overall security landscape on a dashboard. More information on Azure Sentinel can be found here.
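To illustrate why centralizing data through connectors matters, here is a toy model of the flow described above. It is not Sentinel's actual API (real Sentinel analytics rules are written as Kusto Query Language queries). Two hypothetical connectors emit events, `ingest` gathers them into one store, and a simple analytics rule flags an IP address once its denied or failed events across all sources reach a threshold. The event fields, addresses, and threshold are assumptions.

```python
from collections import Counter
from typing import Callable, Dict, Iterable, List

Event = Dict[str, str]


def firewall_connector() -> List[Event]:
    # Hypothetical stand-in for a connector that forwards firewall logs.
    return [
        {"source": "firewall", "ip": "203.0.113.7", "action": "Deny"},
        {"source": "firewall", "ip": "198.51.100.10", "action": "Allow"},
    ]


def signin_connector() -> List[Event]:
    # Hypothetical stand-in for a connector that forwards sign-in logs.
    return [
        {"source": "signin", "ip": "203.0.113.7", "action": "Failure"},
        {"source": "signin", "ip": "203.0.113.7", "action": "Failure"},
        {"source": "signin", "ip": "198.51.100.10", "action": "Success"},
    ]


def ingest(connectors: Iterable[Callable[[], List[Event]]]) -> List[Event]:
    """Collect events from every connector into one central store."""
    events: List[Event] = []
    for connector in connectors:
        events.extend(connector())
    return events


def suspicious_ips(events: List[Event], threshold: int = 3) -> List[str]:
    """Toy analytics rule: flag IPs whose denied or failed events reach the threshold."""
    bad = Counter(e["ip"] for e in events if e["action"] in {"Deny", "Failure"})
    return sorted(ip for ip, count in bad.items() if count >= threshold)


if __name__ == "__main__":
    events = ingest([firewall_connector, signin_connector])
    print(suspicious_ips(events))  # prints ['203.0.113.7']
```

In this example neither source on its own reaches the threshold for 203.0.113.7 (one firewall deny, two failed sign-ins), but correlating them in one place does.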

DDoS Protection

This is a platform-level service that Azure offers. As mentioned earlier, the cloud providers are now answering the call to provide cloud native security tools. Azure DDoS Protection is one of the newer services, and it helps protect cloud resources against distributed denial-of-service (DDoS) attacks.

Azure Firewall

Azure Firewall is an Azure service that helps protect virtual networks. Because it is a managed service, it comes with built-in advantages, including real-time analysis of traffic and feeding that data into Azure Sentinel.

Web Application Firewall

For applications hosted on Azure App Service, a web application firewall is essential for their security. For a truly cloud native application hosted on App Service, adding the Web Application Firewall (deployed in front of the app, for example on Azure Application Gateway or Azure Front Door) brings its security in line with the cloud native approach.

Azure NSG

An NSG (Network Security Group) is a primitive (native) service offering that helps us implement rules for incoming and outgoing traffic. The beauty of an NSG is that it acts like Windows Firewall but can be applied to resources such as subnets and network interfaces. When an NSG is associated with a subnet, its rules are enforced on all the VMs and other resources that are part of that subnet.
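The rule-processing behavior can be sketched as follows. NSG rules are evaluated in priority order, lowest number first, and processing stops at the first rule that matches; if nothing else matches, a default deny rule applies. This model is deliberately simplified and hypothetical: it handles only inbound traffic, a single destination port per rule, and one default rule, whereas real NSGs also include defaults such as AllowVnetInBound and support port ranges and service tags. The rule names, VM names, and addresses are made up.

```python
import ipaddress
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class NsgRule:
    name: str
    priority: int        # lower number is evaluated first
    source_prefix: str   # CIDR block, or "*" for any source
    port: Optional[int]  # destination port; None means any port
    access: str          # "Allow" or "Deny"


# Simplified stand-in for Azure's built-in DenyAllInBound default rule.
DEFAULT_DENY = NsgRule("DenyAllInBound", 65500, "*", None, "Deny")


def matches(rule: NsgRule, source_ip: str, port: int) -> bool:
    """True if the rule's source prefix and port both cover this traffic."""
    source_ok = rule.source_prefix == "*" or (
        ipaddress.ip_address(source_ip) in ipaddress.ip_network(rule.source_prefix)
    )
    port_ok = rule.port is None or rule.port == port
    return source_ok and port_ok


def evaluate_inbound(rules: List[NsgRule], source_ip: str, port: int) -> str:
    """Walk the rules by ascending priority; the first match decides."""
    for rule in sorted(rules + [DEFAULT_DENY], key=lambda r: r.priority):
        if matches(rule, source_ip, port):
            return f"{rule.access} ({rule.name})"
    # Not reached in practice, because DEFAULT_DENY matches all traffic.
    return "Deny (no match)"


def evaluate_for_subnet(
    rules: List[NsgRule], vms: List[str], source_ip: str, port: int
) -> Dict[str, str]:
    """An NSG on a subnet applies the same rule set to every VM in that subnet."""
    return {vm: evaluate_inbound(rules, source_ip, port) for vm in vms}


if __name__ == "__main__":
    subnet_rules = [
        NsgRule("deny-bad-range", 90, "203.0.113.0/24", None, "Deny"),
        NsgRule("allow-https", 100, "*", 443, "Allow"),
        NsgRule("allow-ssh-from-admin", 110, "10.0.1.0/24", 22, "Allow"),
    ]
    print(evaluate_for_subnet(subnet_rules, ["web-vm-1", "web-vm-2"], "203.0.113.7", 443))
```

Note how "deny-bad-range" at priority 90 wins over "allow-https" at priority 100 for traffic from 203.0.113.7, and how one subnet-level rule set produces the same decision for every VM in the subnet.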

Conclusion

In this article we have looked at two approaches to cloud security. As we have seen from the tools and the conceptual architecture, cloud providers now offer managed services for security. Using these managed services for cloud security infrastructure is a step in the right direction towards achieving cloud native security.